Last time I introduced Fluent NHibernate; this time I would like to go further and show you how to use Conventions and AutoPersistenceModel.

But before we get to the point, let's take a quick look at what has changed in our sample web application:

1) Global.asax

The NHibernate SessionFactory is created only once, on application start, and is then reused to create a Session for each request. This approach allows us to use the NHibernate Session in the code-behind of ASP.NET pages.

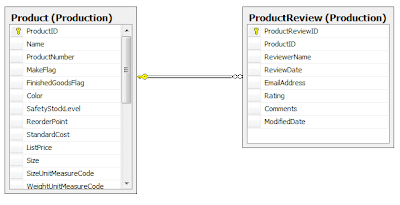

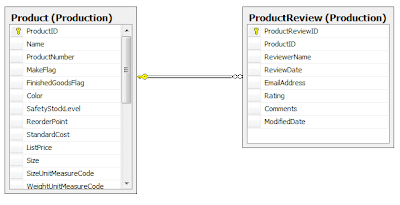
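A minimal sketch of this session-per-request setup might look like the following. It assumes NHibernate is configured through a standard `hibernate.cfg.xml` picked up by `Configuration.Configure()`; the `CurrentSession` accessor and the `SessionKey` name are hypothetical helpers introduced here for illustration, and the sample project may organize this differently.

```csharp
using System;
using System.Web;
using NHibernate;
using NHibernate.Cfg;

public class Global : HttpApplication
{
    // Hypothetical key under which the per-request session is stored.
    private const string SessionKey = "nhibernate.current_session";

    // Built once per application, because creating it is expensive.
    private static ISessionFactory sessionFactory;

    // Hypothetical accessor that pages can call from their code-behind.
    public static ISession CurrentSession
    {
        get { return (ISession)HttpContext.Current.Items[SessionKey]; }
    }

    protected void Application_Start(object sender, EventArgs e)
    {
        // Assumption: connection settings live in hibernate.cfg.xml.
        // The Fluent NHibernate mappings would be added to config here.
        Configuration config = new Configuration().Configure();
        sessionFactory = config.BuildSessionFactory();
    }

    protected void Application_BeginRequest(object sender, EventArgs e)
    {
        // One lightweight session per HTTP request.
        HttpContext.Current.Items[SessionKey] = sessionFactory.OpenSession();
    }

    protected void Application_EndRequest(object sender, EventArgs e)
    {
        ISession session = HttpContext.Current.Items[SessionKey] as ISession;
        if (session != null)
        {
            session.Dispose();
        }
    }
}
```

Storing the session in `HttpContext.Items` keeps it scoped to a single request, so two concurrent requests never share one session.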

2) To demonstrate more interesting things I had to add one more table, so now we are playing with two tables: Production.Product and Production.ProductReview.

I am, of course, still using the AdventureWorks database. With the new table and the relation between the two tables, our mappings have changed:

    public class ProductMap : ClassMap<Product>
    {
        public ProductMap()
        {
            WithTable("Production.Product");
            Id(x => x.Id, "ProductID");

            Map(x => x.SellEndDate);
            // ...
            Map(x => x.ModifiedDate).Not.Nullable();

            HasMany<ProductReview>(x => x.ProductReview).WithKeyColumn("ProductID").AsBag().Inverse();
        }
    }

    public class ProductReviewMap : ClassMap<ProductReview>
    {
        public ProductReviewMap()
        {
            WithTable("Production.ProductReview");

            Id(x => x.Id, "ProductReviewID");

            Map(x => x.Comments).WithLengthOf(3850);
            Map(x => x.EmailAddress).Not.Nullable().WithLengthOf(50);
            Map(x => x.Rating).Not.Nullable();
            Map(x => x.ReviewDate).Not.Nullable();
            Map(x => x.ReviewerName).Not.Nullable().WithLengthOf(50);
            Map(x => x.ModifiedDate).Not.Nullable();

            References(x => x.Product, "ProductID");
        }
    }

Please note that I have skipped some irrelevant parts of the mappings, but you can download the sample project to get the full source code. A bit of clarification:

- A Product can have zero or more reviews. In code, the Product class has an additional IList property.

- A review is about a product, therefore there is a not-null foreign key in the ProductReview table.
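To make the two points above concrete, here is a rough sketch of what the domain classes could look like. The property names come from the mappings; the property types and the constructor are assumptions made for illustration, so check the sample project for the real definitions. Members are `virtual` because NHibernate needs that to generate lazy-loading proxies.

```csharp
using System;
using System.Collections.Generic;

public class Product
{
    public virtual int Id { get; set; }
    public virtual DateTime? SellEndDate { get; set; }   // assumed nullable
    public virtual DateTime ModifiedDate { get; set; }

    // One product, zero or more reviews (the HasMany(...).AsBag() side).
    public virtual IList<ProductReview> ProductReview { get; set; }

    public Product()
    {
        ProductReview = new List<ProductReview>();
    }
}

public class ProductReview
{
    public virtual int Id { get; set; }
    public virtual string ReviewerName { get; set; }
    public virtual DateTime ReviewDate { get; set; }
    public virtual string EmailAddress { get; set; }
    public virtual int Rating { get; set; }               // assumed int
    public virtual string Comments { get; set; }
    public virtual DateTime ModifiedDate { get; set; }

    // Every review belongs to exactly one product (the References(...) side).
    public virtual Product Product { get; set; }
}
```

Because the collection is mapped as `Inverse()`, the `Product` reference on the review is the side that owns the foreign key, so code that adds a review should set both ends of the relation.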

Conventions

Now we can get to the point. As you can see in the mappings above, in some places it is required to specify the column name or table name. This is because the AdventureWorks database doesn't follow the default convention (see the Convention over Configuration design pattern). Differences:

- the table name is different than the class name (Product vs Production.Product)

- the id property has a different name than the primary key column (Id vs ProductID)

- properties representing links between tables have different names than the foreign key columns (Product vs ProductID)

Luckily for us, we don't have to repeat the same changes in all our mappings: we can change the default convention. Here is how it can be done:

    var models = new PersistenceModel();

    // table name = "Production." + class name
    models.Conventions.GetTableName = type => String.Format("{0}.{1}", "Production", type.Name);

    // primary key = class name + "ID"
    models.Conventions.GetPrimaryKeyNameFromType = type => type.Name + "ID";

    // foreign key column name = class name + "ID"
    //
    // it will be used to set key column in example like this:
    //
    // <bag name="ProductReview" inverse="true">
    //   <key column="ProductID" />
    //   <one-to-many class="AdventureWorksPlayground...ProductReview, AdventureWorksPlayground, ..." />
    // </bag>
    models.Conventions.GetForeignKeyNameOfParent = type => type.Name + "ID";

    // foreign key column name = property name + "ID"
    //
    // it will be used in case like this:
    // <many-to-one name="Product" column="ProductID" />
    models.Conventions.GetForeignKeyName = prop => prop.Name + "ID";

    models.addMappingsFromAssembly(typeof(Product).Assembly);
    models.Configure(config);
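To see which names these naming rules produce, the same lambdas can be run on their own, without NHibernate. This is only an illustrative sketch: the `ConventionDemo` class is hypothetical, the entity classes are stripped-down stand-ins, and the delegate types (`Func<Type, string>` and `Func<PropertyInfo, string>`) are my assumption about how the convention hooks are typed.

```csharp
using System;
using System.Reflection;

// Stripped-down stand-ins for the real entities.
public class Product
{
    public int Id { get; set; }
}

public class ProductReview
{
    public int Id { get; set; }
    public Product Product { get; set; }
}

public static class ConventionDemo
{
    // The same rules as above, held in plain delegates.
    public static readonly Func<Type, string> GetTableName =
        type => String.Format("{0}.{1}", "Production", type.Name);

    public static readonly Func<Type, string> GetPrimaryKeyNameFromType =
        type => type.Name + "ID";

    public static readonly Func<Type, string> GetForeignKeyNameOfParent =
        type => type.Name + "ID";

    public static readonly Func<PropertyInfo, string> GetForeignKeyName =
        prop => prop.Name + "ID";

    public static void Main()
    {
        PropertyInfo productProperty = typeof(ProductReview).GetProperty("Product");

        Console.WriteLine(GetTableName(typeof(ProductReview)));              // Production.ProductReview
        Console.WriteLine(GetPrimaryKeyNameFromType(typeof(ProductReview))); // ProductReviewID
        Console.WriteLine(GetForeignKeyNameOfParent(typeof(Product)));       // ProductID
        Console.WriteLine(GetForeignKeyName(productProperty));               // ProductID
    }
}
```

Both foreign key rules end up at `ProductID` here, but they start from different inputs: one from the parent class name (the one-to-many side), the other from the name of the referencing property (the many-to-one side).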

With these conventions in place, our mapping can be simplified to this:

    public class ProductReviewMap : ClassMap<ProductReview>
    {
        public ProductReviewMap()
        {
            Id(x => x.Id);

            Map(x => x.Comments).WithLengthOf(3850);
            Map(x => x.EmailAddress).Not.Nullable().WithLengthOf(50);
            Map(x => x.Rating).Not.Nullable();
            Map(x => x.ReviewDate).Not.Nullable();
            Map(x => x.ReviewerName).Not.Nullable().WithLengthOf(50);
            Map(x => x.ModifiedDate).Not.Nullable();

            References(x => x.Product);
        }
    }

The API for conventions has since changed completely, so the code above is no longer valid. You can find an updated version in this post: Conventions After Rewrite.

AutoPersistenceModel

In fact, there is not much left in our mappings, and you certainly won't find anything particularly creative there, so why not get rid of them completely? Yes, it's possible: if everything is 100% in accordance with the convention, then you can simply use the following code to get NHibernate configured:

    var models = AutoPersistenceModel
        .MapEntitiesFromAssemblyOf<ProductReview>()
        .Where(t => t.Namespace == "AdventureWorksPlayground.Domain.Production");

    models.Conventions.GetTableName = prop => String.Format("{0}.{1}", "Production", prop.Name);
    models.Conventions.GetPrimaryKeyNameFromType = type => type.Name + "ID";
    models.Conventions.GetForeignKeyNameOfParent = type => type.Name + "ID";
    models.Conventions.GetForeignKeyName = prop => prop.Name + "ID";

    models.Configure(config);
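The `Where` filter is what decides which classes get treated as entities. As a rough illustration of that selection step only (plain reflection, not Fluent NHibernate's actual implementation), the sketch below picks types out of an assembly by namespace. `EntityDiscoveryDemo` and the `AdventureWorksPlayground.Web` namespace are hypothetical names added for the example.

```csharp
using System;
using System.Linq;

namespace AdventureWorksPlayground.Domain.Production
{
    public class Product { }
    public class ProductReview { }
}

namespace AdventureWorksPlayground.Web
{
    // Lives in the same assembly but must not be mapped.
    public class ProductPage { }
}

public static class EntityDiscoveryDemo
{
    // Returns the names of all types that pass the namespace filter.
    public static string[] FindEntityNames()
    {
        return typeof(AdventureWorksPlayground.Domain.Production.ProductReview).Assembly
            .GetTypes()
            .Where(t => t.Namespace == "AdventureWorksPlayground.Domain.Production")
            .Select(t => t.Name)
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToArray();
    }

    public static void Main()
    {
        Console.WriteLine(String.Join(", ", FindEntityNames())); // Product, ProductReview
    }
}
```

Keeping entities in a dedicated namespace is what makes a filter this simple work; any helper class placed in that namespace would be picked up as an entity too.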

And that is all you need: a few POCO objects representing database tables, AutoPersistenceModel, and you are ready to go. It certainly lets you get started with development very quickly, but what worries me is that there is no way to say that some properties are mandatory or have a length limit. Specifying that additional data may help you discover data-related problems faster and, moreover, it should improve NHibernate's performance ... but is it worth it? What do YOU think?

(EDIT: Examples in this post were updated on 6.02.2009 to reflect changes in the Fluent NHibernate API.)

Links:

Other interesting posts about Conventions and AutoPersistenceModel:

